Development

Musk Ox

Musk Ox is a fantastic instrumental chamber folk project from up in Canada. I recently stumbled on these guys and their guitarist Nathanael Larochette through some collaborations he did with the (now defunct, but often fantastic) Oregon-based post-metal/neofolk band Agalloch. Here's a neat documentary on the making of their album Woodfall.

Another cool project is the acoustic spin-off that Nathanael did from the last Agalloch album.

His solo stuff is great. Music about trees and such.

AI not I

The notion that what we call AI is somehow approaching a form of consciousness remains an absurdity: fantastical thinking by people who really ought to spend a minimal amount of time at least reading up on philosophy of mind. Generative AI fits perfectly into John Searle's Chinese Room (the main variation is that probability replaces rules, which reflects the one major innovation of NLP over decades).

I don't mean to suggest the technology is not extremely useful - it is, and will become more so. But: reality check.

A New Product for Podcast Listeners

We're excited to announce our first consumer product.

Introducing Listen Later

Launch Special

Sign up now and get free credits to convert multiple articles into audio and enjoy this unique service.

Why You Will Love Listen Later

Convenience Redefined: Transform articles into podcasts. Listen while you drive, exercise, or relax.

Exceptional Audio Quality: Enjoy natural, human-like AI narration. It's like listening to a storyteller, but powered by cutting-edge technology.

Seamless Experience: Using Listen Later is as easy as sending an email. There is no app to install and it's available on all devices and podcast apps.

Learn More at ListenLater.net

For the time being

Putting the decorations back into their cardboard boxes --

Some have got broken -- and carrying them up to the attic.

The holly and the mistletoe must be taken down and burnt,

And the children got ready for school. There are enough

Left-overs to do, warmed-up, for the rest of the week --

Not that we have much appetite, having drunk such a lot,

Stayed up so late, attempted -- quite unsuccessfully --

To love all of our relatives, and in general

Grossly overestimated our powers. Once again

As in previous years we have seen the actual Vision and failed

To do more than entertain it as an agreeable

Possibility, once again we have sent Him away,

Begging though to remain His disobedient servant,

The promising child who cannot keep His word for long.

The Christmas Feast is already a fading memory,

And already the mind begins to be vaguely aware

Of an unpleasant whiff of apprehension at the thought

Of Lent and Good Friday which cannot, after all, now

Be very far off. But, for the time being, here we all are,

Back in the moderate Aristotelian city

Of darning and the Eight-Fifteen, where Euclid's geometry

And Newton's mechanics would account for our experience,

And the kitchen table exists because I scrub it.

It seems to have shrunk during the holidays. The streets

Are much narrower than we remembered; we had forgotten

The office was as depressing as this. To those who have seen

The Child, however dimly, however incredulously,

The Time Being is, in a sense, the most trying time of all.

For the innocent children who whispered so excitedly

Outside the locked door where they knew the presents to be

Grew up when it opened. Now, recollecting that moment

We can repress the joy, but the guilt remains conscious;

Remembering the stable where for once in our lives

Everything became a You and nothing was an It.

And craving the sensation but ignoring the cause,

We look round for something, no matter what, to inhibit

Our self-reflection, and the obvious thing for that purpose

Would be some great suffering. So, once we have met the Son,

We are tempted ever after to pray to the Father;

"Lead us into temptation and evil for our sake."

They will come, all right, don't worry; probably in a form

That we do not expect, and certainly with a force

More dreadful than we can imagine. In the meantime

There are bills to be paid, machines to keep in repair,

Irregular verbs to learn, the Time Being to redeem

From insignificance. The happy morning is over,

The night of agony still to come; the time is noon:

When the Spirit must practice his scales of rejoicing

Without even a hostile audience, and the Soul endure

A silence that is neither for nor against her faith

That God's Will will be done, That, in spite of her prayers,

God will cheat no one, not even the world of its triumph.

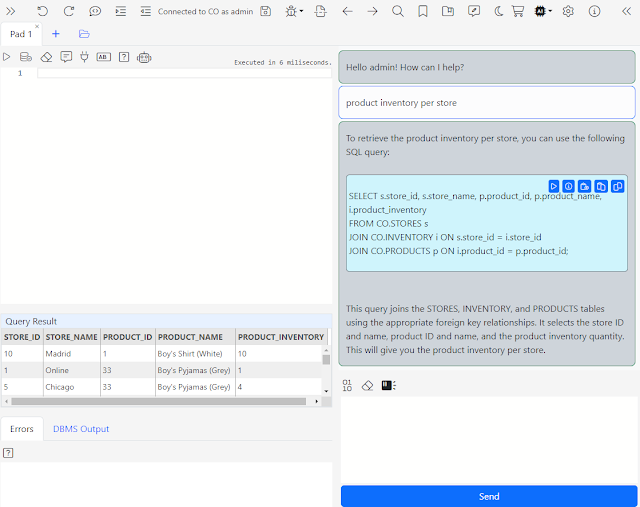

My Coding Experience with an AI Assistant

AI is real and it is here. Its implications for knowledge workers, including you, will be enormous.

There is no escaping AI in our line of work. Therefore, while building the new version of Gitora PL/SQL Editor, I’ve fully embraced it.

The last few months have been the most interesting, rewarding and productive period of my professional life. I’ve practically stopped using Google search and StackOverflow, two websites I’d consider essential to my work just a few months ago.

I’ve coded the Gitora PL/SQL debugger virtually without reading a single line of documentation about how to build debuggers in Java or the specifics of how to build a Java debugger for PL/SQL. I am not even sure if there is any documentation available about building a PL/SQL debugger on the Internet.

I just talked about these topics with ChatGPT, then analyzed and used the code snippets it produced.

All CSS and almost all of the run-of-the-mill code I needed to write for the debugger and the Gitora AI Assistant for SQL and PL/SQL (oh the irony!) was written by either ChatGPT or GitHub Copilot. Heck, I even had the previous sentence grammatically verified with ChatGPT. I got it right in my first attempt and ChatGPT congratulated me.

After using an AI Assistant for a few months, I cannot imagine coding without it. I view it as an amazing junior pair programmer I am working with.

Although useful, ChatGPT and GitHub Copilot are not as great as they can be while I am working with the Oracle database because they don't know the objects in the schema I am working in.

A context-aware AI Assistant which knows about the tables, their relationships and other objects in the database would be immensely helpful. So I decided to build one. Gitora Editor 2.0 is my second iteration of a schema-aware AI Assistant.

If you think this is interesting and useful, you can download the Gitora Editor from this link. I'd appreciate any feedback. This whole thing is pretty new and I am interested in knowing how you are using the Gitora AI Assistant and how you think I can make it better.

Gitora AI Chat: Write SQL with AI

Introducing the Gitora AI Assistant: Write, debug and explain SQL and PL/SQL with the help of AI.

Gitora AI Assistant knows about your tables, their structure and their relationships so that it can give relevant answers.

To learn more and download, click this link.

PL/SQL Editor with AI Assistant

Introducing Gitora PL/SQL AI Assistant. It suggests PL/SQL code in real-time right from the Gitora Editor.

This was one of the MOST INTERESTING features I've ever worked on. The first iteration is a small step in the right direction. But the possibilities are ENDLESS.

I'd truly appreciate it if you could try it out and provide feedback so that I can improve it. Ideas on where to apply AI next are most welcome.

You can download the editor from this link: https://www.gitora.com/plsql_editor.html

New Web Based PL/SQL Editor

We are excited to announce the launch of Gitora 7, which includes a groundbreaking addition to our suite of tools:

The Gitora PL/SQL Editor

The Gitora PL/SQL Editor represents a fresh approach to writing PL/SQL code, drawing on the latest advances in code editors to deliver an unparalleled experience to the PL/SQL community. Packed with modern features that are intuitive and easy to use, this editor represents a significant leap forward in coding in PL/SQL.

Let’s take a quick look at some of the features that make the Gitora PL/SQL Editor so remarkable:

- Beautiful Design

The Gitora PL/SQL Editor boasts a beautiful, modern design that makes it easy on the eyes and intuitive to use. With Bootstrap 5 components and Font Awesome icons, you can rest assured that the editor will be both stylish and functional.

- Dark Mode

When you’re working long hours on code, the last thing you want is to strain your eyes. That’s why the Gitora PL/SQL Editor comes with a gorgeous dark mode that is easy on the eyes and perfect for long coding sessions.

- Single Page Web Application

Designed from the ground up for a multi-monitor, multi-tab world, the Gitora PL/SQL Editor is a single-page web application that allows you to open multiple editors in many browser tabs across multiple monitors. This means that you can work on complicated tasks without losing your place.

- Auto Complete Suggestions

The editor’s smart auto-complete suggestions make it easy to find what you’re looking for. Whether you’re typing package, procedure or function names, the editor will suggest relevant options to help speed up your workflow.

- Package (and Object Type) Content Navigator

Navigating between package procedures and functions is a breeze with the package content navigator. With just a single click, you can easily move between different parts of your code.

- Split Editor

The split editor lets you work on two sections of your code simultaneously, making it easy to compare code or write related sections side by side.

- Go Back/Forward in the Editor

The editor remembers the locations of your cursor, allowing you to easily navigate back and forth between different parts of your code with just a click, much like a browser’s back/forward buttons.

- Go to Definition

With the CTRL+click command, you can quickly go to the definition of any database object in the code, allowing you to move between different parts of your project with ease.

- Code Folding

Code folding lets you get a better view of the code structure by folding code blocks, making it easier to read and understand large chunks of code.

- Integrated with the Gitora Version Control

Finally, the editor is fully integrated with the Gitora Version Control, allowing you to use it independently or from within the Gitora Version Control application. This makes it easy to manage your code changes and keep track of your work as you go.

We hope you’re as excited about the Gitora PL/SQL Editor as we are! With its many powerful features and intuitive design, we’re confident that it will be a game-changer for Oracle database developers everywhere.

Download Gitora 7 today to see what it can do for you!

Mimesis and Desire

"Man is the creature who does not know what to desire, and he turns to others in order to make up his mind. We desire what others desire because we imitate their desires." Rene Girard

Faixa Marrom

Mitsuyo Maeda > Carlos Gracie Sr. > Carlos Gracie Junior > Jean Jacques Machado > Eddie Bravo > Denny Prokopos > Alex Canders

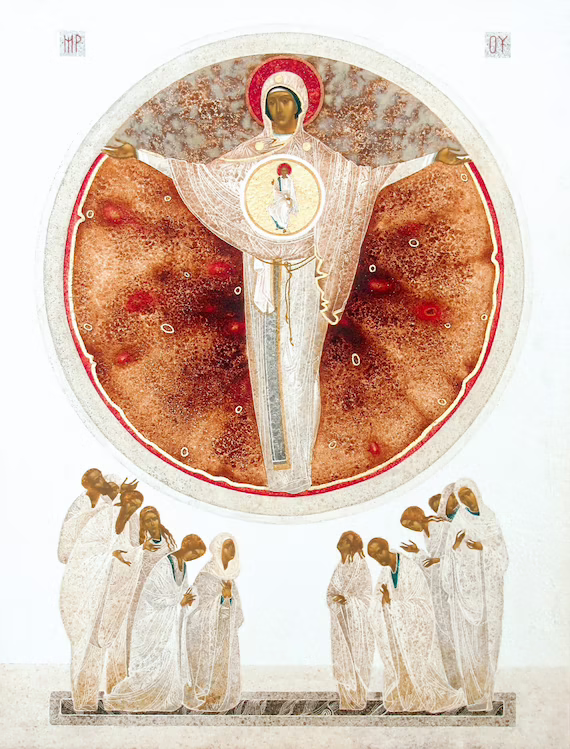

Three Carols for Nativity

Three outstanding carols for the Christmas season.

1 In the Dark Night

A traditional Ukrainian koliady (carol): this is just heart-rending in its simple beauty expressed in the Ukrainian language. The theme of a bright light in darkness is particularly poignant as Ukraine itself is presently plunged into darkness by the war. This holiday, I wish for peace: among Ukrainians, with brother Russians, and for the world.

In the dark night, above Bethlehem,
a bright star shined out, covering the Holy Land.
The Most Pure Virgin, the Holy Bride,
in a poor cave gave birth to a Son.

[Chorus] Sleep Jesus, sleep my little baby,
Sleep my little star,
About your fate, my little sweet,
To you I will sing.

She gently kissed and swaddled him,
She put him to bed, and quietly started to sing,
You will grow up, my Son, you’ll become a grown-up,
And you will go out into the world, my baby.

Sleep Jesus, sleep my sweet little baby,
Sleep my little star,
About your fate, my little sweet,
To you I will sing.

The Love of the Lord and God’s truth,
You will bring faith to the world, to your people,
The truth will live on, the shackles of sin will be shattered,
[But my child], on Golgotha, my child will die.

Sleep Jesus, sleep my sweet little baby,
Sleep my little star,
About your fate, my little sweet,
To you I will sing.

Sleep, Jesus, sleep my sweet little baby,
Sleep my rose blossom,
With hope on You
The entire world is watching!

2 The Cherry Tree Carol

An Old English carol based on medieval legends about the Holy Family. This version is rendered in modern English and accompanied by a simple harp (Anonymous 4 does another version that is a cappella in Old English, but something about this short version with the harp is just pleasant to the ears and to the soul).

3 Georgian Alilo

If you get some Georgians together for a holiday there will be singing (also, alcohol in my experience). I can't understand a word when they do, but it's pretty cool.

Since Georgian is such an interesting language, I list here the lyrics / transliteration / translation from comments:

ალილო და ჰოი ალილო და ჰოო
alilo da hoi alilo da hoo
Hallelujah Hallelujah

ქრისტეს მახარობელნი ვართ ქრისტეშობას მოგილოცავთოო
krist’es makharobelni vart krist’eshobas mogilotsavtoo
We are heralds of Christ wishing you a Merry Christmas

ოცდახუთსა დეკემბერსა ქრისტეიშვა ბეთლემშინაო
otsdakhutsa dek’embersa krist’eishva betlemshinao
On the twenty-fifth of December, Christ was born in Bethlehem

ანგელოზნი უგალობენ დიდება მაღალთა შინაო
angelozni ugaloben dideba maghalta shinao
Angels sing praises to the highest of the house

ეს რომ მწყემსებმა გაიგგეს მივიდნენ და თავანი სცეს მას
es rom mts’q’emsebma gaigges mividnen da tavani stses mas
Pastors heard the good news and they went to worship Him.

ვარსკვლავები ბრწყინვალებენ ანათებენ ბეთლემსაოო
varsk’vlavebi brts’q’invaleben anateben betlemsaoo
The stars are shining, Illuminating Belém!

შორი ქვენიდან მოსულმა მოგვებმა ძღვენი შესწირეს
shori kvenidan mosulma mogvebma dzghveni shests’ires
Coming from distant lands, The magicians gave Him a gift

ქრისტეს მახარობელნი ვართ ქრისტეშობას მოგილოცავთო
krist’es makharobelni vart krist’eshobas mogilotsavto
We are heralds of Christ wishing you a Merry Christmas

ოცდახუთსა დეკემბერსა ქრისტე იშვა ბეთლემშინაო
otsdakhutsa dek’embersa krist’e ishva betlemshinao
On the twenty-fifth of December, Christ was born in Bethlehem

While Christmas is properly celebrated on January 7, being an American, I'm stuck with this weekend ending the season. Fortunately I am not stuck with the commercial music/dreck that American culture imposes on the season: the 12 days of Christmas until Epiphany/Theophany are still a good time to continue to enjoy this fine singing with all that behind us.

Using Git with PL/SQL in a Shared Development Database

With Gitora 6, developers can work on the same code base (i.e. a package, procedure etc…) in the same database without blocking each other.

Many development teams use a single database for development. Many of them use the same database for testing, as well. They achieve this by using different schemas for different purposes. Gitora 6 enables these teams to create Git repos for these schemas and pull updates between them.

With Gitora 6, you can even create a different schema for every developer and have them merge their code using Git.

Gitora 6 enables you to implement any modern development workflow in a single database.

How does Gitora 6 work?

Gitora 6 introduces a new repo type called Single Schema Repo (SSR). As its name suggests, an SSR manages database objects from a single schema. The DDL scripts in SSRs don’t contain schema prefixes, so Gitora can execute them in other schemas in the same database.

This enables developers to work on the same package, procedure, function, view etc… (i.e. anything that can be created with the CREATE OR REPLACE command) at the same time, in the same database in different schemas.
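To make the idea of prefix-free DDL concrete, here is a minimal Python sketch. It is not Gitora's implementation, which the announcement does not describe; the function name `strip_schema_prefix` and the regex approach are assumptions made purely for illustration. It removes `SCHEMA.` qualifiers from a script so that the same text could be compiled in a different schema.

```python
import re


def strip_schema_prefix(ddl: str, schema: str) -> str:
    """Remove `SCHEMA.` qualifiers (quoted or unquoted) from a DDL script.

    Hypothetical helper for illustration only. It works on plain text, so
    it would also rewrite matching text inside string literals or comments.
    """
    # \b keeps LOGISTICS from matching inside LOGISTICS_TEST or MY_LOGISTICS.
    pattern = re.compile(
        r'"?\b' + re.escape(schema) + r'\b"?\s*\.\s*',
        re.IGNORECASE,
    )
    return pattern.sub("", ddl)


ddl = (
    "CREATE OR REPLACE PACKAGE LOGISTICS.PKG_ROUTES AS\n"
    "  PROCEDURE plan(p_id IN logistics.orders.id%TYPE);\n"
    "END PKG_ROUTES;"
)
portable_ddl = strip_schema_prefix(ddl, "LOGISTICS")
print(portable_ddl)
```

Once the prefix is gone, object names resolve against whichever schema runs the script, which is what allows one script to serve both LOGISTICS and LOGISTICS_TEST.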

An Example

Let’s go through an example: Let’s assume that the team is working on logistics software and has a schema named LOGISTICS that stores all their database objects. The team can create a schema (or may already have one) called LOGISTICS_TEST in the same database. Here are the steps the team needs to follow so that they can pull their changes to LOGISTICS_TEST.

- Create a single schema Gitora repo that manages the LOGISTICS schema. Let’s call it REPO_LOGISTICS.

- Add all relevant database objects in the LOGISTICS schema to REPO_LOGISTICS.

- Create another single schema Gitora repo that manages the LOGISTICS_TEST schema. Let’s call it the REPO_LOGISTICS_TEST

- Pull from the REPO_LOGISTICS to REPO_LOGISTICS_TEST

That’s it. That’s all there is to it. From this point on, any change you make to the code in the LOGISTICS schema can be pulled to the LOGISTICS_TEST schema using Gitora (and vice versa).

Single Schema Repos can also be used to create separate development environments in the same database for every developer in the team.

Multiple Developers, Same Database

Assuming we already have the LOGISTICS schema and the REPO_LOGISTICS repo from the previous example, here is how that would work:

- Create a schema for each developer: LOGISTICS_JOE, LOGISTICS_MARY, LOGISTICS_PAUL.

- Create a single schema Gitora repo for each schema. Let’s call them REPO_LOGISTICS_JOE, REPO_LOGISTICS_MARY, REPO_LOGISTICS_PAUL respectively.

- Pull from the REPO_LOGISTICS to REPO_LOGISTICS_JOE, REPO_LOGISTICS_MARY and REPO_LOGISTICS_PAUL.

From this point on, all three developers can work in their own schema and edit any package, procedure, view etc… freely, without overwriting each other’s changes or being affected by them. Using Gitora, they can create new branches in their own repo, for features or bugs they work on without affecting anyone else.

When the time comes to put it all together, they can use Gitora to merge their changes.

We wrote a few articles to get you started:

How to implement agile development in a single database

How to manage changes to tables with Single Schema Repos

Before Gitora 6, agile database development with Gitora required multiple databases to implement. With Gitora 6 you can achieve it in a single database.

We are incredibly excited to release Gitora 6. We believe it will bring a massive productivity boost to Oracle database development teams worldwide. We can’t wait for you to try it.

Gitora 6 is available now. You can download it from this link.

Are You Using Version Control for Your Database or Are You Ticking a Box?

You use a version control system to solve certain problems, to accomplish certain tasks. These are:

- Keep track of who changes what, when and why.

- If necessary, use previous versions of the code base for testing, development etc…

- Move changes between environments faster, with fewer errors during deployment.

- Improve productivity of the team (i.e. less time coordinating manually and more time developing. For example different developers working on the same parts of the code base simultaneously and merging their changes at a later time, hopefully mostly automatically.).

If you are using a version control system but not accomplishing any of the things above, are you really using a version control system?

Here is a perfectly reasonable scenario that I’ve seen in the real world. I’ve seen it with teams using Git with SQL Developer, and with teams using SVN or Git with other Oracle PL/SQL IDEs. Invariably, they all end up here:

- In the DEV database, you make changes to the package PKG_HIRING in the HR schema and then commit the package under /HR/PKG_HIRING.sql file in Git.

- Next, another developer makes changes to the same package. He commits his changes under /HR/next_version/PKG_HIRING.sql, because… why not? It’s not like there is any enforcement.

- A third developer commits his changes to /HR/PKG_HIRING.pkb because for some reason, the tool he uses for editing uses the .pkb suffix for packages.

- The fourth developer, named John, decides to copy PKG_HIRING to a file and work on it. He thinks he can move his changes to the database later. So he creates /HR/JOHN_DO_NOT_TOUCH/PKG_HIRING.sql

- A fifth developer makes changes to the HR.PKG_HIRING package but forgets to commit the package to Git. Hey, it happens…

- A sixth developer makes even more changes. He commits the package to Git under HR/PKG_HIRING.sql, thereby committing not only his changes but also the fifth developer’s changes.

Three months later, you are tasked with deploying the latest version of the HR application to the TEST database. Which PKG_HIRING package are you going to deploy to TEST?

I actually know the answer to this question. You will deploy the one in the HR schema. It’s the safest option. I cannot help but wonder, though: in these past three months, did John save his changes to the database? If so, how did he do it? Did he simply overwrite the package? What if some changes were lost?

At this point, I have to ask… Why do you even have a version control system? Why does that Git/SVN repo even exist? Is it useful at all, or are you doing all this just to tick a box and to be able to say that you are using a version control system?
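The ambiguity in this scenario is easy to make visible. The short Python sketch below takes a list of repository paths and reports every database object that is stored in more than one file. It is only an illustration: the function name `find_ambiguous_objects` and the convention that the top-level folder is the schema and the file stem is the object name are assumptions, not something any particular tool prescribes.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def find_ambiguous_objects(paths):
    """Group files by (schema, object) and return groups with more than one file.

    Assumes the first folder is the schema and the file name without its
    extension is the object name; subfolders and extensions are ignored.
    """
    by_object = defaultdict(list)
    for raw in paths:
        path = PurePosixPath(raw.lstrip("/"))
        schema = path.parts[0].upper()
        by_object[(schema, path.stem.upper())].append(raw)
    return {key: sorted(files) for key, files in by_object.items() if len(files) > 1}


# The four committed copies of PKG_HIRING from the scenario above.
repo_files = [
    "/HR/PKG_HIRING.sql",
    "/HR/next_version/PKG_HIRING.sql",
    "/HR/PKG_HIRING.pkb",
    "/HR/JOHN_DO_NOT_TOUCH/PKG_HIRING.sql",
]
ambiguous = find_ambiguous_objects(repo_files)
for (schema, name), files in ambiguous.items():
    print(f"{schema}.{name} has {len(files)} candidate files:")
    for f in files:
        print("  ", f)
```

A report like this only detects the mess after the fact; it cannot say which of the files, if any, matches what is actually compiled in the database.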

Gitora solves all of the problems above. It creates a version control system around Git and enforces it at the database level.

Let’s repeat the same steps for the six developers but this time let them use Gitora:

- In DEV, you make changes to the PKG_HIRING package in the HR schema. When you are done, you open the Gitora web app and see the list of database objects you changed. You select the ones you want to commit (in this case PKG_HIRING), add a comment to the commit (such as the ticket number) and click the commit button.

- The second developer follows the same process as you. He cannot make any changes to the package until you are done with it. Once you are done, he can make his changes. He opens the Gitora app, commits his changes to Git and is done. Note that he doesn’t get to choose which folder he saves the PKG_HIRING.sql file to. Gitora creates a well-structured working directory with reasonable folder and file names for database objects. The locations of these files are also standard. When human decisions are involved in naming files and placing them in the file system, a lot of errors can occur. Gitora prevents these errors.

- The third developer’s IDE used the .pkb extension for his file. Since developers don’t get to name the files or choose the folders they save database object scripts to, the third developer also has no choice but to follow the same process as the first two developers. By the way, Gitora works with any IDE. You can use SQL Developer, SQL Navigator, TOAD or PL/SQL Developer. It doesn’t matter. Everyone in your team can use a different editor if they choose to do so. Gitora still works.

- The fourth developer was impatient. In the first scenario, he created a new file under JOHN_DO_NOT_TOUCH and saved his changes to that file. With Gitora, he has no direct access to Git or the Git working folder (Gitora is a server-side solution; you don’t install Git on developers’ computers), so he cannot do that. However, if he wants to do mischief, he can still copy the PKG_HIRING package to a new file on his computer and make changes there. Then, at a later time, he can simply overwrite everything in the PKG_HIRING package with his version. Depending on when he does it, this will cause some changes in the package to be lost. He can even go ahead and commit his changes with Gitora. But hold on a second. At some point, either during development or testing, the mistake he made will be detected. In the first scenario, there is no way to know for certain who erased all the functioning code and exactly what code should be put back into the package. With Gitora, it is trivial to use Git to figure out who deleted the perfectly functioning code from PKG_HIRING, because Gitora always keeps track of who changed what, when and why. Since we know when the mistake happened, we also know the correct version of the package to restore. John will take the heat for his egregious mistake and merge his changes into the correct version of the package. No one wants that kind of heat. It is easy to see that Gitora incentivizes the right kind of behavior among teammates by keeping everyone accountable for their actions as well as making it possible to recover from critical mistakes.

- The fifth developer simply forgot to commit his changes to Git. With Gitora there are two ways this can be caught. First, the sixth developer who wants to edit the package will contact the fifth developer to ask when the package will be available. Second, the Gitora web app always shows which objects are locked by whom. So before preparing a deployment, you can always check whether any database objects are still locked.

- In the first scenario, the sixth developer committed the fifth developer’s changes along with his own. With Gitora, the sixth developer will always commit only his changes because there is no way he can gain access to PKG_HIRING without the fifth developer releasing the package first. Gitora enforces that if an object is being edited by someone, no one else can edit it. With Gitora, version control is not something you do on the side; it is tightly integrated into your workflow and enforced.
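The rule that only one person can edit an object at a time can be modelled in a few lines. The Python sketch below is a toy in-memory model, not Gitora's mechanism (the article only says the rule is enforced at the database level). The class `LockRegistry`, its method names, and the choice to release the lock on commit are all assumptions made for illustration.

```python
class ObjectLockedError(Exception):
    """Raised when a developer touches an object someone else is editing."""


class LockRegistry:
    """Toy model: one editor per database object, with a commit history."""

    def __init__(self):
        self._owners = {}   # object name -> developer currently editing it
        self.history = []   # (developer, object name, message) per commit

    def checkout(self, obj, developer):
        owner = self._owners.get(obj)
        if owner is not None and owner != developer:
            raise ObjectLockedError(f"{obj} is being edited by {owner}")
        self._owners[obj] = developer

    def commit(self, obj, developer, message):
        if self._owners.get(obj) != developer:
            raise ObjectLockedError(f"{developer} does not hold {obj}")
        self.history.append((developer, obj, message))
        del self._owners[obj]  # assumption: committing releases the object

    def locked_objects(self):
        """What to check before preparing a deployment."""
        return dict(self._owners)


registry = LockRegistry()
registry.checkout("HR.PKG_HIRING", "fifth_dev")
try:
    registry.checkout("HR.PKG_HIRING", "sixth_dev")
    blocked = False
except ObjectLockedError:
    blocked = True  # the sixth developer has to wait

pending = registry.locked_objects()  # the forgotten commit is visible here
registry.commit("HR.PKG_HIRING", "fifth_dev", "TICKET-1: fix hiring rules")
registry.checkout("HR.PKG_HIRING", "sixth_dev")
```

In this model the forgotten commit from the first scenario cannot go unnoticed: the object stays in `locked_objects()` until its editor commits, and every commit in `history` belongs to exactly one developer.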

And finally, three months later, you are tasked with preparing the deployment to TEST. How are you going to move the changes to the TEST database? With Gitora, all you need to do is to open the Gitora app, connect to the TEST database and pull the HR repo from DEV to TEST and you are done.

Going back to the beginning of the article; Gitora makes Git a useful version control system for the Oracle Database.

As shown in the second scenario with Gitora you can:

- Keep track of who changes what, when and why.

We caught John misbehaving.

- If necessary, use previous versions of the code base for testing, development etc…

We recovered an old version of PKG_HIRING and had John merge his changes into it.

- Move changes between environments faster, with fewer errors during deployment.

Done via the point-and-click Gitora Web Application.

- Improve productivity of the team (i.e. less time coordinating manually and more time developing. For example different developers working on the same parts of the code base simultaneously and merging their changes at a later time, hopefully mostly automatically.).

The second scenario reduced manual coordination significantly.

Gitora can do a lot more. You can have different teams work on the same code base at the same time and merge their changes, just like Java or JavaScript developers. However, that’s beyond the scope of this article. Please read this article and this article to learn more about how you can implement parallel development with Gitora.

DakhaBrakha

A barely related side note: as a case study in creative folk-culture appropriation to modern forms, I recommend the Ukrainian musical film Hutsulka Ksenya. The plot revolves around a young American whose late father leaves him his fortune on the condition he marry a Ukrainian woman. Distinctly unenthusiastic, he visits the Carpathian region (presumably Zakarpattia Oblast, from which the Pavlik family emigrated) and falls for a young Hutsul woman.... I won't say more; it's a terribly creative film and thoroughly enjoyable.

Hutsuls, as an aside, are not strictly speaking Ukrainians in the ethno-cultural sense, but in the broader sense of Ukraine as a multi-cultural nation.

Isaac of Syria in Dostoevsky

One recurring theme is an attempt to uncover the influences behind the portrait of the staretz Zosima. Many figures have been cited, including the famous Tikhon of Zadonsk, about whom an entire book has been written on the topic. To my mind, however, the most obvious parallel to the teachings of Zosima is the 7th century ascetic Isaac of Syria. There could not be clearer parallels between his ascetic writings and the long chapter on Zosima's homilies in Karamazov. I liberally quote from two sites with supporting details and illustrations.

------------------

There is an interesting connection between St Isaac of Syria and Dostoevsky. The latter owned an 1858 edition of the Slavonic translation of the Homilies by St Paisius Velichkovsky (Victor Terras, A Karamazov Companion: Commentary on the Genesis, Language, and Style of Dostoevsky’s Novel [Madison: U of Wisconsin, 1981], p. 22). Furthermore, Dostoevsky mentions St Isaac’s Ascetical Homilies by name twice in The Brothers Karamazov. The first time is in Part I, Book III, Chapter 1, ‘In the Servants’ Quarters’, where the narrator observes that Grigory Vasilievich, Fyodor Karamazov’s manservant, ‘somewhere obtained a copy of the homilies and sermons of “Our God-bearing Father, Isaac the Syrian”, which he read persistently over many years, understanding almost nothing at all of it, but perhaps precisely for that reason prizing and loving it all the more’ (Fyodor Dostoevsky, The Brothers Karamazov, trans. Richard Pevear and Larissa Volokhonsky [NY: Vintage, 1991], p. 96). Dostoevsky then mentions the book again in 4.11.8, this time in the rather more sinister context of Ivan’s third meeting with Smerdyakov, when the latter 'took from the table that thick, yellow book, the only one lying on it, the one Ivan had noticed as he came in, and placed it on top of the bills. The title of the book was The Homilies of Our Father among the Saints, Isaac the Syrian. Ivan Fyodorovich read it mechanically' (Dostoevsky, p. 625).

But more importantly, Victor Terras has pinpointed a number of St Isaac’s teachings that make a definite appearance in the words of Elder Zosima in II.VI.3, especially in (g) ‘Of Prayer, Love, and the Touching of Other Worlds’ (Dostoevsky, pp. 318-20), and (i) ‘Of Hell and Hell Fire: A Mystical Discourse’ (Dostoevsky, pp. 322-4). Terras quotes the following passage from ‘Homily Twenty-Seven’ as being ‘important for the argument of The Brothers Karamazov’ (Terras, p. 23):

Sin, Gehenna, and Death do not exist at all with God, for they are effects, not substances. Sin is the fruit of free will. There was a time when sin did not exist, and there will be a time when it will not exist. Gehenna is the fruit of sin. At some point in time it had a beginning, but its end is not known. Death, however, is a dispensation of the wisdom of the Creator. It will rule only a short time over nature; then it will be totally abolished. Satan’s name derives from voluntarily turning aside [the Syriac etymological meaning of satan] from the truth; it is not an indication that he exists as such naturally. (Ascetical Homilies, p. 133)

Terras may, however, be on the wrong trail with this particular passage, though not perhaps with the rest of his parallels, since according to a note in the translation, this particular homily only exists in Syriac (Ascetical Homilies, p. 133), and does not appear to have been available in any translation Dostoevsky would have read (Introduction, Ascetical Homilies, pp. lxxvi-lxxvii). Another interesting, though less important, discrepancy, is that Pevear and Volokhonsky, in their note on the name of St Paisius (he is referenced in I.I.5 [Dostoevsky, p. 27], and footnoted on p. 780 of Pevear’s and Volokhonsky’s translation), date Dostoevsky’s edition of the Elder’s translation of St Isaac to 1854 rather than 1858. Furthermore, J.M.E. Featherstone lists among St Paisius's works, Svjatago otca našego Isaaka Sirina episkopa byvšago ninevijskago, slova duxovno-podvižničeskija perevedennyja s grečeskago.... (Moscow, 1854), thus making Pevear and Volokhonsky's date more likely, it would seem ('Select Bibliography', The Life of Paisij Velyčkovs'kyj, trans. J.M.E. Featherstone [Cambridge, MA: Harvard U, 1989], p. 163 ).

I just wanted to highlight briefly this interesting connection. At an even deeper level, however, it has been picked up on, for one, by Archimandrite Vasileios of Iveron. Having considered the ‘artistic’ gifts of St Isaac and the spiritual insight of Dostoevsky, he concludes, ‘Thus, whether you read Abba Isaac, or Dostoevsky, in the end you get the same message, grace and consolation’ (‘Από τον Αββά Ισαάκ’, p. 100).

source: http://logismoitouaaron.blogspot.com/2009/02/this-glory-of-orientst-isaac-syrian.html

Though the teachings of Elder Zosima from Dostoevsky’s Brothers Karamazov seem exotic to many western readers and possibly unorthodox, they in fact show a remarkable similarity to those of a favorite 7th century eastern saint, St Isaac the Syrian. We know that Dostoevsky owned a newly-available translation of St Isaac’s Ascetical Homilies, and this volume is in fact mentioned by name twice in the novel, though in seemingly inconsequential contexts. Dostoevsky was no doubt deeply affected by the saint’s spirituality, and I think Zosima’s principal views in fact reflect and are indebted to those of St Isaac. Below I will list some of these distinctive views, with illustrating quotes from both the fictional Elder Zosima and St Isaac himself. (And note: these were simply the quotes that I could find very easily; I’m sure more digging would find even more striking parallels.)

Love for all creation:

Elder Zosima: “Love God’s creation, love every atom of it separately, and love it also as a whole; love every green leaf, every ray of God’s light; love the animals and the plants and love every inanimate object. If you come to love all things, you will perceive God’s mystery inherent in all things; once you have perceived it, you will understand it better and better every day. And finally you will love the whole world with a total, universal love.”

St Isaac: “What is a merciful heart? It is a heart on fire for the whole of creation, for humanity, for the birds, for the animals, for demons, and for all that exists. By the recollection of them the eyes of a merciful person pour forth tears in abundance. By the strong and vehement mercy that grips such a person’s heart, and by such great compassion, the heart is humbled and one cannot bear to hear or to see any injury or slight sorrow in any in creation. For this reason, such a person offers up tearful prayer continually even for irrational beasts, for the enemies of the truth, and for those who harm her or him, that they be protected and receive mercy. And in like manner such a person prays for the family of reptiles because of the great compassion that burns without measure in a heart that is in the likeness of God.”

Responsibility for all:

Elder Zosima: “There is only one salvation for you: take yourself up, and make yourself responsible for all the sins of men. For indeed it is so, my friend, and the moment you make yourself sincerely responsible for everything and everyone, you will see at once that it is really so, that it is you who are guilty on behalf of all and for all. Whereas by shifting your own laziness and powerlessness onto others, you will end by sharing in Satan’s pride and murmuring against God.”

St Isaac: “Be a partaker of the sufferings of all…Rebuke no one, revile no one, not even those who live very wickedly. Spread your cloak over those who fall into sin, each and every one, and shield them. And if you cannot take the fault on yourself and accept punishment in their place, do not destroy their character.”

Love is Paradise on Earth:

Elder Zosima: ““Gentlemen,” I cried suddenly from the bottom of my heart, “look at the divine gifts around us: the clear sky, the fresh air, the tender grass, the birds, nature is beautiful and sinless, and we, we alone, are godless and foolish, and do not understand that life is paradise, for we need only wish to understand, and it will come at once in all its beauty, and we shall embrace each other and weep””

St Isaac: “Paradise is the love of God, wherein is the enjoyment of all blessedness, and there the blessed Paul partook of supernatural nourishment…Wherefore, the man who lives in love reaps life from God, and while yet in this world, he even now breathes the air of the resurrection; in this air the righteous will delight in the resurrection. Love is the Kingdom, whereof the Lord mystically promised His disciples to eat in His Kingdom. For when we hear Him say, “Ye shall eat and drink at the table of my Kingdom,” what do we suppose we shall eat, if not love? Love is sufficient to nourish a man instead of food and drink.”

Non-literal ‘fire’ of hell:

Elder Zosima: “Fathers and teachers, I ask myself: “What is hell?” And I answer thus: “The suffering of being no longer able to love.”…People speak of the material flames of hell. I do not explore this mystery, and I fear it, but I think that if there were material flames, truly people would be glad to have them, for, as I fancy, in material torment they might forget, at least for a moment, their far more terrible spiritual torment. And yet it is impossible to take this spiritual torment from them, for this torment is not external but is within them”

St Isaac: “As for me I say that those who are tormented in hell are tormented by the invasion of love. What is there more bitter and violent than the pains of love? Those who feel they have sinned against love bear in themselves a damnation much heavier than the most dreaded punishments. The suffering with which sinning against love afflicts the heart is more keenly felt than any other torment. It is absurd to assume that the sinners in hell are deprived of God’s love. Love is offered impartially. But by its very power it acts in two ways. It torments sinners, as happens here on earth when we are tormented by the presence of a friend to whom we have been unfaithful. And it gives joy to those who have been faithful. That is what the torment of hell is in my opinion: remorse”

source: https://onancientpaths.wordpress.com/2013/07/27/the-elder-zosima-and-st-isaac-the-syrian/

Agile PL/SQL Development

Gitora 6, the latest version of the source control tool for the Oracle Database, enables PL/SQL developers to implement agile development practices in a single database.

With Gitora 6, developers can work on the same code base (packages, procedures, etc.) at the same time, in the same database.

Learn more at http://blog.gitora.com/introducing-gitora-6/

Intentionality, Mind, and Nature

"Neither doctrine nor metaphysics need be immediately invoked to see the impossibility of rational agency within a sphere of pure nature; a simple phenomenology of what it is we do when we act intentionally should suffice. The rational will, when freely moved, is always purposive; it acts always toward an end: conceived, perceived, imagined, hoped for, resolved upon. Its every act is already, necessarily, an act of recognition, judgment, evaluation, and decision, and is therefore also a tacit or explicit reference to a larger, more transcendent realm of values, meanings, and rational longings. Desire and knowledge are always, in a single impulse, directed to some purpose present to the mind, even if only vaguely. Any act lacking such purposiveness is by definition not an act of rational freedom. There are, moreover, only two possible ways of pursuing a purpose: either as an end in itself or for the sake of an end beyond itself. But no finite object or purpose can wholly attract the rational will in the latter way; no finite thing is desirable simply in itself as an ultimate end. It may, in relative terms, constitute a more compelling end that makes a less compelling end nonetheless instrumentally desirable, but it can never constitute an end in itself. It too requires an end beyond itself to be compelling in any measure; it too can evoke desire only on account of some yet higher, more primordial, more general disposition of reason’s appetites. Even what pleases us most immediately can be intentionally desired only within the context of a rational longing for the Good itself. If not for some always more original orientation toward an always more final end, the will would never act in regard to finite objects at all. Immanent desires are always in a sense deferred toward some more remote, more transcendent purpose. All concretely limited aspirations of the will are sustained within formally limitless aspirations of the will. 
In the end, then, the only objects of desire that are not reducible to other, more general objects of desire, and that are thus desirable entirely in and of themselves, are those universal, unconditional, and exalted ideals, those transcendentals, that constitute being’s abstract perfections. One may not be, in any given instant, immediately conscious that one’s rational appetites have been excited by these transcendental ends; I am not talking about a psychological state of the empirical ego; but those ends are the constant and pervasive preoccupation of the rational will in the deepest springs of its nature, the source of that “delectable perturbation” that grants us a conceptual grasp of finite things precisely by constantly carrying us restlessly beyond them and thereby denying them even a provisional ultimacy.

In fact, we cannot even possess the barest rational cognizance of the world we inhabit except insofar as we have always already, in our rational intentions, exceeded the world. Intentional recognition is always already interpretation, and interpretation is always already judgment. The intellect is not a passive mirror reflecting a reality that simply composes itself for us within our experience; rather, intellect is itself an agency that converts the storm of sense-intuitions into a comprehensible order through a constant process of interpretation. And it is able to do this by virtue of its always more original, tacit recognition of an object of rational longing—say, Truth itself—that appears nowhere within the natural order, but toward which the mind nevertheless naturally reaches out, as to its only possible place of final rest. All proximate objects are known to us, and so desired or disregarded or rejected, in light of that anticipated finality. Even to seek to know, to organize experience into reflection, is a venture of the reasoning will toward that absolute horizon of intelligibility. And since truly rational desire can never be a purely spontaneous eruption of the will without purpose, it must exhibit its final cause in the transcendental structure of its operation. Rational experience, from the first, is a movement of rapture, of ecstasy toward ends that must be understood as—because they must necessarily be desired as—nothing less than the perfections of being, ultimately convertible with one another in the fullness of reality’s one source and end. Thus the world as something available to our intentionality comes to us in the interval that lies between the mind’s indivisible unity of apprehension and the irreducibly transcendental horizon of its intention—between, that is, the first cause of movement in the mind and the mind’s natural telos, both of which lie outside the composite totality of nature."

D. B. Hart, You Are Gods. University of Notre Dame Press, April 2022